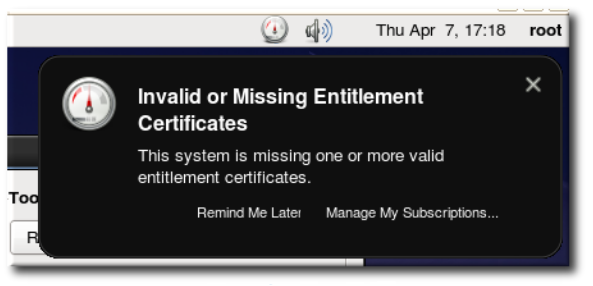

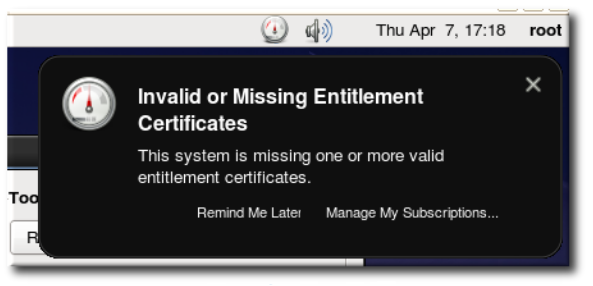

Last night, we published the blog post presenting the work I led during the past few months, where we removed the need to deploy RHEL entitlement certificates to build and deploy the GPU driver of the NVIDIA GPU Operator. This requirement for a valid RHEL subscription key was a major burden for OpenShift GPU computing customers, as the key generation and deployment process couldn't be properly automated.

This work was a great collaborative effort, as it required enhancing multiple parts of the overall software stack:

- first, at the team level, with enhancements to Node Feature Discovery (Eduardo Arango) and to the OpenShift Driver Toolkit container image (David Gray and Zvonko Kaiser), plus Ashish Kamra;
- then, at the project level, with core OpenShift corner-case bugs discovered and solved, revolving around the Driver Toolkit dynamic image-streams;
- finally, through inter-company Open Source cooperation, with the NVIDIA Cloud-Native team (Shiva Krishna Merla) reviewing the PRs, giving valuable feedback and spotting bugs in the middle of the successive rewrites of the logic!

Timeline of the project milestones (in 2021):

- May 27th..June 1st: the idea of using the Driver Toolkit for entitlement-free builds arises from a Slack discussion, as a way to solve disconnected cluster deployment challenges. We quickly confirmed that, with minor changes, the DTK provides everything required to build the NVIDIA driver.
- July 30th..August 11th: working POC of the GPU Operator building the driver without entitlement, without any modification of the operator, only a bit of configuration and manually baked YAML files.
- August 26th..~November 15th: intensive work to add seamless upgrade support to the POC and get it all polished, tested and merged into the GPU Operator.
- December 2nd: GPU Operator v1.9.0 is released, with entitlement-free deployment enabled by default on OpenShift \o/

It's funny to see how it took only a couple of days to get the first POC working, while integrating the seamless upgrade support took two full months of work!

(Seamless upgrade support is the idea that, at a given point during a cluster upgrade, different nodes may be running different versions of the OS. With one container image for all OS versions, no worries: the driver deployment works all the time. But with one container image per OS version, that's another story! This is covered in depth in the blog post.)